ArraysBasics

ArraysBasics

An array is a fixed-size data structure that supports O(1) access to any element.

Recommendations

The vector package is what you should reach for if you only need single dimensional arrays, a beginner friendly introduction can be found here. If you need multidimensional arrays, use array, repa, or accelerate. If you need arrays of bytes specifically, use bytestring.

Notes on performance:

-

You should almost always use the unboxed version of the data structure unless you need lazily computed content.

ByteStringis already unboxed. -

Benchmarks of various sequence-like data structured can be found here.

-

If you want speed, use accelerate. It generates parallel SIMD-vectorised code for multicore CPUs and CUDA GPUs.

- For a lighter-weight alternative to

accelerate, with still pretty good performance, use repa.

- For a lighter-weight alternative to

The de facto standard array type used in Haskell. Fast. Used by many libraries.

Note that it's not quite like C++ vector. (For instance, it doesn't support fast append.)

A tutorial can be found at https://haskell-lang.org/library/vector.

-

vector-algorithms (sorting, binary search), vector-random (generate vectors with random values), hybrid-vectors (mix boxed and unboxed elements), patches-vector (diff 2 vectors)

-

vector-th-unbox (a helper for defining unboxed vector instances)

-

vector-instances (orphan instances), cereal-vector, vector-binary-instances

Arrays of bytes. (You could use Vector Word8 instead, but most applications don't.)

Useful whenever you need, well, arrays of bytes. Can be faster than Vector (at least it was true in 2011).

-

Parsing: most parsing libraries can parse bytestrings, but attoparsec is most commonly used, and

Data.Attoparsec.Zeptois even faster for some kinds of parsing. There are also binary-parser and scanner (which I haven't tried) -

Fast builders: bytestring-tree-builder, fast-builder, buffer-builder

-

Encoding: base64-bytestring, base32-bytestring, base16-bytestring (hex encoding)

-

Replacements for

Read/Show: bytestring-show, readable -

Streaming: pipes-bytestring, quiver-bytestring

-

Data structures: rope (for manipulating very long strings efficiently, i.e. the rope data structure), bytestring-trie (trees of bytestrings with common prefixes, i.e. the trie data structure)

-

Various: bytestring-delta (diffing bytestrings / producing patches and applying them to bytestrings), bytestring-mmap (accessing files/devices as bytestrings, see mmap), stringsearch (splitting/search/replacement), bytestring-plain (compact representation for short bytestrings, claimed to be better than

Data.ByteString.Short)

<notes are empty>

Data.Array.Accelerate defines an embedded array language for computations for high-performance computing in Haskell. Computations on multi-dimensional, regular arrays are expressed in the form of parameterised collective operations, such as maps, reductions, and permutations. These computations may then be online compiled and executed on a range of architectures.

A short tutorial on how to implement the Mandelbrot set in Accelerate is available here.

A simple example:

As a simple example, consider the computation of a dot product of two vectors of floating point numbers:

dotp :: Acc (Vector Float) -> Acc (Vector Float) -> Acc (Scalar Float)

dotp xs ys = fold (+) 0 (zipWith (*) xs ys)Except for the type, this code is almost the same as the corresponding Haskell code on lists of floats. The types indicate that the computation may be online-compiled for performance - for example, using Data.Array.Accelerate.LLVM.PTX it may be on-the-fly off-loaded to the GPU.

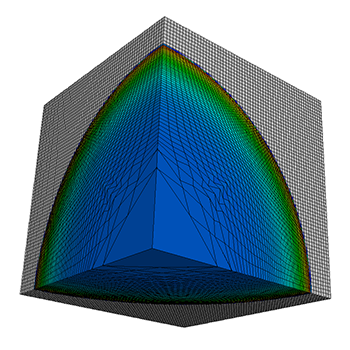

Larger example: LULESH

LULESH-accelerate is in implementation of the Livermore Unstructured Lagrangian Explicit Shock Hydrodynamics (LULESH) mini-app. LULESH represents a typical hydrodynamics code such as ALE3D, but is simplified and hard-coded to solve the Sedov blast problem on an unstructured hexahedron mesh.

The Accelerate implementation remains high-level while maintaining excellent performance compared to the reference C+OpenMP (> 2x faster) and CUDA (same performance) implementations.

-

accelerate-llvm-native: Backend supporting parallel execution on multicore CPUs.

-

accelerate-llvm-ptx: Backend supporting parallel execution on CUDA-capable NVIDIA GPUs. Requires a GPU with compute capability 2.0 or greater. See this table of supported GPUs.

-

accelerate-examples: Computational kernels and applications showcasing the use of Accelerate as well as a regression test suite, supporting function and performance testing. Examples include:

- An implementation of the Canny edge detection algorithm

- An interactive Mandelbrot set generator

- A particle-based simulation of stable fluid flows

- An n-body simulation of gravitational attraction between solid particles

- An implementation of the PageRank algorithm

- A simple interactive ray tracer

- A cellular automata simulation

- A "password recovery" tool, for dictionary lookup of MD5 hashes

-

accelerate-io: Fast conversions between Accelerate arrays and other array formats (including vector and repa).

-

accelerate-fft: Discrete Fourier transforms, with FFI bindings to optimised implementations.

-

accelerate-bignum: Fixed-width large integer arithmetic.

-

accelerate-blas: Linear systems, matrix decompositions, and other numerical computations for use in Accelerate. Most operations are implemented efficiently via FFI calls to BLAS and LAPACK

-

colour-accelerate: Colour representations in Accelerate (RGB, sRGB, HSV, and HSL).

-

gloss-accelerate: Generate gloss pictures from Accelerate.

-

gloss-raster-accelerate: Parallel rendering of raster images and animations.

-

lens-accelerate: Lens operators for Accelerate types.

-

linear-accelerate: Linear vector spaces in Accelerate.

-

mwc-random-accelerate: Generate Accelerate arrays filled with high quality pseudorandom numbers.

-

Like vector, does fusion – when you apply several operations to an array, it doesn't necessarily create intermediate arrays.

-

Auto-parallelizable – pass

+RTS -Nto your program and it'll probably become faster. (You can control the way parallelization happens.) -

Supports advanced operations with multidimensional arrays (slicing, reshaping, transposition, etc).

-

Overall performance is almost C-like.

-

Elements in the array are saved in a flat structure, even when the array is multidimensional

-

Algorithms: repa-algorithms (DFT, FFT, randomness, and some other algorithms), repa-linear-algebra, hmatrix-repa, repa-fftw (bindings for fftw to do FFT/DFT)

-

Working with files: repa-io (read/write arrays as text, binary, or BMP), repa-devil (process images with repa), repa-sndfile (read/write sound files), JuicyPixels-repa (images)

This library is in the Haskell report, but it's used less often than vector. You might want to use it if you're on a new machine and don't have vector installed yet, or if you want multidimensional arrays (or arrays with indexing that doesn't start from 0).